Trish Rose-Sandler, Missouri Botanical Garden, Biodiversity Heritage Library

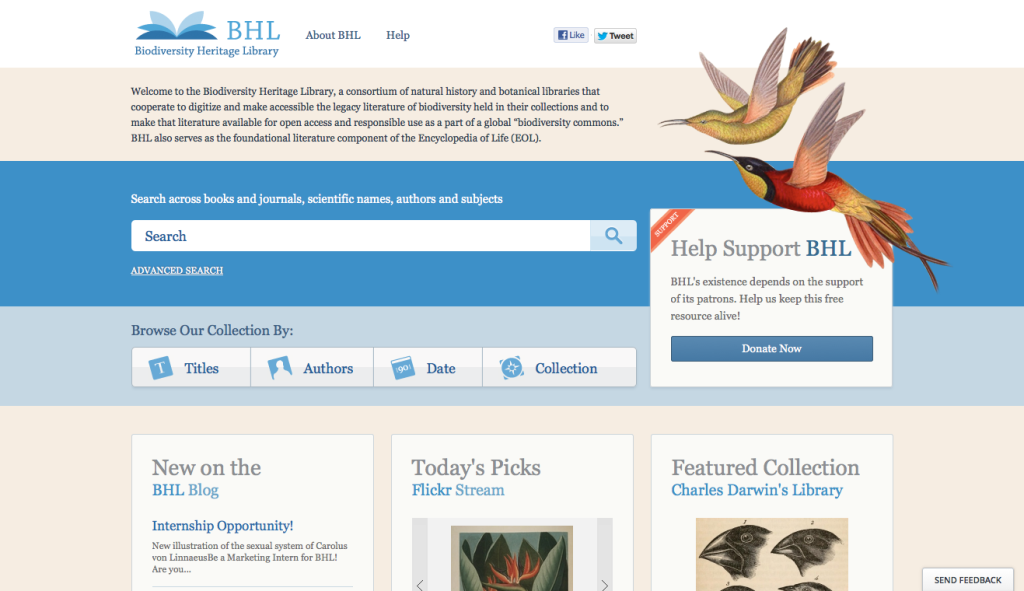

I work at the Missouri Botanical Garden in St. Louis, where I was hired to work on the Biodiversity Heritage Library (BHL). BHL is a consortium of natural history museum and botanical garden libraries that digitize their collections and put them online for open access as part of a global “biodiversity commons.” Today our portal holds about 46 million digitized pages of text about plants and animals. With that critical mass of data, we wanted to do more with it, and crowdsourcing was one way to do that.

We have been engaged in crowdsourcing for several years. As part of our day-to-day digitization work, people give us feedback on the types of content they would like us to scan and help us correct bibliographic metadata. We also use crowdsourcing to tackle specific data access challenges through grant-funded projects. We currently have three such projects, two funded by IMLS and one by NEH, and I will give you a brief synopsis of each. The first IMLS grant, Purposeful Gaming and BHL, tests how effective games are for crowdsourcing OCR text correction. Tiltfactor is designing the games for us, and they will go live in late May or early June. The second IMLS grant, Mining Biodiversity, was part of the international Digging into Data Challenge. That project uses crowds to verify the accuracy of semantic markup that automated algorithms applied to the text.

And then we have a third project, funded by NEH, called Art of Life, which just ended last month. It came about because these millions of pages of books and journals contain beautiful natural history illustrations that are not currently findable, since we have no metadata about them. The goal of Art of Life was to develop algorithms to identify which pages contain illustrations; we then crowdsourced the classification and description of those illustrations.

We have learned two key lessons from all of our crowdsourcing activities. First, crowdsourcing is a really effective way to accomplish tasks you otherwise couldn’t do with limited resources, and it’s a great way to engage people in dialog about your content. Second, crowdsourcing puts a strain on staff time. You need to either find an existing tool and adopt it or build your own. You need to figure out how the tool works with your local system and how to get data into and out of it, which can take a lot of time. Then you have to bring that data back into your local system and figure out how to blend it with the data you already have. Finally, when you open things up to users, you have to be responsive and budget time for answering their questions and addressing their problems.

This presentation was a part of the workshop Engaging the Public: Best Practices for Crowdsourcing Across the Disciplines. See the full report here.
